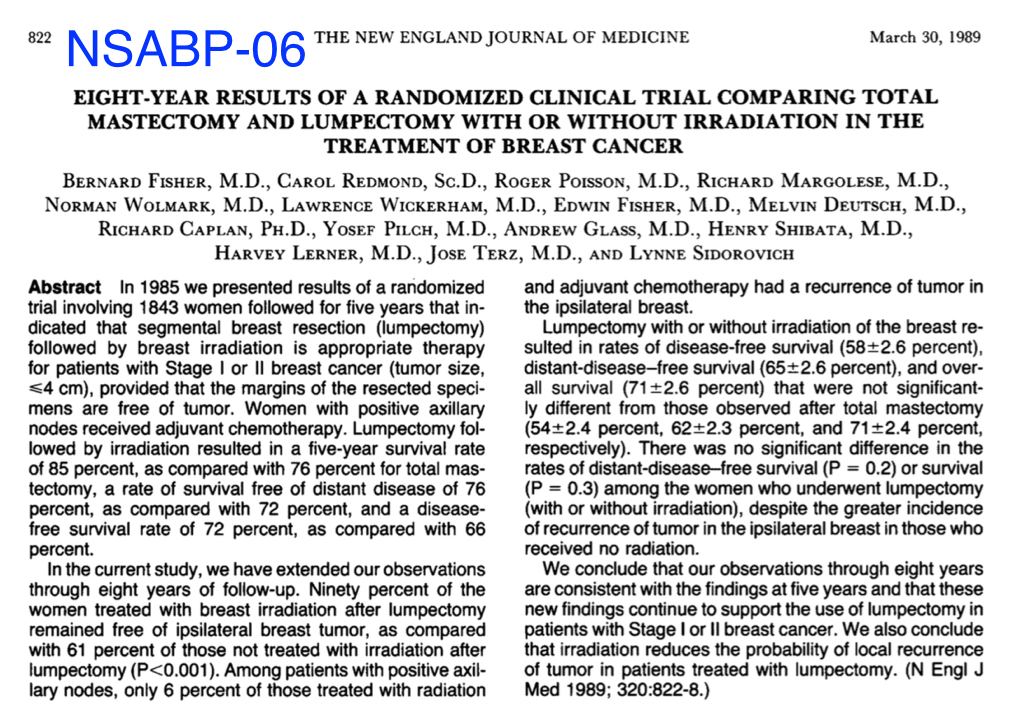

What about all the examples of research which appear to lead to better patient care? What about a trial like NSABP-06, which has changed the way we treat breast cancer? We all tend to think that there is a direct path from clinical research to improvements in clinical patient care: that a given study shows a positive outcome and that clinical practice then adopts this as the standard. A classic example would be the NSABP-06 study on breast cancer. As some of you may recall, this was the big randomized trial which appeared to change our thinking about doing mastectomies for breast cancer. It compared segmental mastectomy with and without radiotherapy versus total mastectomy and showed there was no difference with regard to local recurrence.

There is a tendency to think that the reason we treat many women nowadays with segmental mastectomy and radiotherapy instead of with a total mastectomy is because of NSABP-06. But I would suggest that is a misleading conclusion to draw. I would argue that we do not treat women with segmental mastectomy and radiation because of NSABP-06. We treat women this way because it actually works in our day-to-day practices: when we treat women with a segmental mastectomy and radiotherapy, they do well and do not get a whole bunch of local recurrences. What is the difference, you may be asking? Think of it this way: clinical medicine functions a great deal like a marketplace. Treatments come and go all the time, but the marketplace of medical care eventually sorts out what really works. The reason we treat breast cancer with segmental mastectomy and RT is that the marketplace of medicine tried it out and found it acceptable. It worked in practice. The study NSABP-06 may have played an ancillary role, but it was by no means the deciding factor.

How do I know this? Well, because we have all witnessed countless studies which show a benefit for a given intervention or treatment and which, when adopted by physicians, just do not pan out. The fistula plug treatment, which I mentioned earlier, is a classic example of this phenomenon. Despite the initial positive results, it soon became clear that the marketplace of practitioners just found that it did not work. And it very quickly faded away to become a footnote. This may sound counter-intuitive, but it actually makes a lot of sense. The reason a study is done in the first place is that there is a general feeling that a specific intervention might work best. In the case of NSABP-06, the idea of segmental mastectomy and RT did not come out of thin air. There was plenty of scepticism regarding the effectiveness of total mastectomy, and it was clear that people had been experimenting with segmental mastectomy for quite a while before NSABP-06. Otherwise, the idea of doing the study in the first place would never have come up. When the results appeared to show comparable outcomes for segmental mastectomy and radiotherapy vs total mastectomy, there were some hold-outs in favour of total mastectomy, but over a period of time, most surgeons adopted segmental mastectomy with radiotherapy with relative ease and it became the de facto standard of care. There were also external factors contributing to this, such as a desire on the part of both physicians and women to avoid disfiguring surgery. But the real reason why it has remained the standard of care and why we still treat women with segmental mastectomy and radiotherapy is not because of NSABP-06. It is because segmental mastectomy with radiotherapy simply worked in practice. Surgeons and oncologists who treated these patients did not find a whole bunch of bad local recurrences or complications. And women seemed quite satisfied with the results of preserving their breasts.
The trial, NSABP-06, undoubtedly had some influence in the process, but it was by no means the decisive factor. There is a complex interplay, an essential tension, between the clinical evidence and the practice of medicine, and it is this interplay which leads to the retention of new practices.

I know, this may not seem terribly scientific and is perhaps even a little bit disappointing, given the pedestal upon which we place medical research. But it is the way clinical medicine really works. And given the inherent unreliability of most quantitative research, it's not actually such a bad thing to let the market sort it out. Let me be clear, I do believe that quantitative research plays a role. But it is not a simple, straightforward process in which a given study shows X works better than Y and therefore we all do X over Y. On the contrary, there is a give and take between clinical research and the market of medical practice. Anyone who has practiced medicine for more than 10 years will recognize the following pattern:

- A new intervention or approach is studied which shows great promise.

- We all jump on the bandwagon and try it out.

- After a while, it loses favour among physicians who find it doesn’t seem to hold up in practice.

- Various practitioners start to disembark from the bandwagon.

- Repeat studies are carried out showing that the treatment is not really very effective.

- The treatment is abandoned by all but a few die-hards.

- The treatment slowly fades away and becomes a historical footnote.

There are countless examples of this pattern and no end of novel studies which appear to revolutionize the management of various conditions, but the vast majority of them disappear after a few years, in spite of their initial promise. Why? Because the marketplace of medical practice found that, in spite of the studies, the treatment or intervention just didn’t work in the real world. And we now understand why the initial studies may have been so promising, even though they subsequently failed in practice: because quantification of medical phenomena is such a crude tool, and it misrepresents and distorts the phenomenon we are exploring. What happens invariably in these scenarios is that studies then come out contradicting the original studies and ultimately show that the intervention does not work very well. This was the case with the fistula plug. It is a classic chicken-and-egg problem. What comes first, the chicken of research or the egg of practice?

One final comment in this regard. There is an old adage in medicine which many of you will undoubtedly have heard at some point: “Never be the first to adopt a new treatment and never be the last.” At the core of this old adage is the marketplace analogy. When a new treatment comes out, no matter how promising it may appear, don’t jump on the bandwagon until it has proven itself in the market.